It’s Monday and that means it’s time to get fired up about the week. What better way to kick things off than with a quick conversation about robots?!

Yes, ROBOTS!

Not our friendly bot in the picture though, I’m talking about your robots.txt file. Wait, don’t panic! It’s ok if you don’t know what the heck I’m talking about. We’ll start slow and by the end of this post you will know exactly what a robots.txt file is. I want you to be able to throw down in some serious web geek conversations. Why would you want to do that? Because the robots.txt file controls how search engines and other bots see your site. You probably spend a LOT of time carefully crafting your brand and content, but what’s the point if search engines aren’t viewing your content or are seeing too much of it? Let’s fix that.

Your robots.txt is a text file that is housed at [yourdomain].com/robots.txt. Go ahead and check to see if your site has a robots.txt. Go for it, I’ll wait!
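If you’d rather check from a script than a browser, here’s a minimal Python sketch using only the standard library. The helpers `robots_url` and `fetch_robots` are hypothetical names, and `www.example.com` stands in for your domain. The sketch relies on one rule: robots.txt always sits at the root of the host, whatever page you start from.

```python
from typing import Optional
from urllib.error import HTTPError
from urllib.parse import urljoin
from urllib.request import urlopen


def robots_url(page_url: str) -> str:
    """Return where robots.txt should live for the host serving page_url."""
    # An absolute path ("/robots.txt") replaces the page's path and query,
    # so every page on the site points at the same root-level file.
    return urljoin(page_url, "/robots.txt")


def fetch_robots(page_url: str, timeout: float = 10.0) -> Optional[str]:
    """Download robots.txt, or return None if the server answers with an HTTP error."""
    try:
        with urlopen(robots_url(page_url), timeout=timeout) as resp:
            # urlopen follows redirects silently; compare resp.geturl()
            # with robots_url(page_url) if you want to spot one.
            return resp.read().decode("utf-8", errors="replace")
    except HTTPError as err:
        # A 404 here usually means the site has no robots.txt at all.
        print(f"No robots.txt served: HTTP {err.code}")
        return None


if __name__ == "__main__":
    print(robots_url("https://www.example.com/blog/some-post?ref=home"))
    # prints https://www.example.com/robots.txt
```

Calling `fetch_robots("https://www.yourdomain.com/")` with your real domain returns the file’s text; an HTTP 404 message lines up with the “file doesn’t exist” case.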

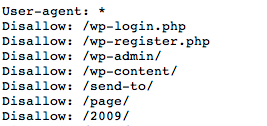

Did you find a file there? If you did, it probably looked something like this:
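Every site’s file is different, but here’s a made-up example of a typical one. Each meaningful line is a `field: value` pair, and anything after a `#` is a comment. The short Python sketch below splits the file into those pairs so you can see its anatomy; the `directives` helper is hypothetical, not part of any library.

```python
# A hypothetical robots.txt; real files vary a lot from site to site.
SAMPLE = """\
# Anything after a hash is a comment and is ignored
User-agent: *
Disallow: /cgi-bin/
Disallow: /search

Sitemap: https://www.example.com/sitemap.xml
"""


def directives(text):
    """Return (field, value) pairs, skipping comments and blank lines."""
    pairs = []
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop any comment, then whitespace
        if ":" not in line:
            continue  # blank or malformed line
        field, value = line.split(":", 1)  # split once: URLs contain colons too
        pairs.append((field.strip().lower(), value.strip()))
    return pairs


for field, value in directives(SAMPLE):
    print(f"{field:>10}: {value}")
```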

If you found a 404 page, were redirected to another page of your site, or simply got an error message, then your robots.txt doesn’t appear to exist or is set up incorrectly. If that’s the case, I still want you to see what a robots.txt file looks like, so go scope one out on another site. I love visiting the robots.txt files on these sites (yes, I’m a freak):

- https://www.whitehouse.gov/robots.txt

- https://www.cnn.com/robots.txt

- https://www.nytimes.com/robots.txt

- https://www.amazon.com/robots.txt

- http://www.ebay.com/robots.txt

- https://www.bestbuy.com/robots.txt

- https://www.google.com/robots.txt

- https://www.bing.com/robots.txt

- https://www.yahoo.com/robots.txt

- https://www.apple.com/robots.txt

- https://www.microsoft.com/robots.txt

As you can see, robots.txt files come in all shapes and sizes! Why is that? Every site is built differently. The content management system, the server, the code, and the shopping cart are just a few of the many variables that affect how your site is constructed and how it appears.

Why does this matter? It matters to web robots. Weeee, robots!

Search engines only know what’s on your site if they are able to access it. While visitors can click freely from page to page of your site (assuming there aren’t logins or other secure areas they have to pass through first), robots *should* follow the instructions you give them about what to access and how often. The robots.txt is this set of instructions.

If you’re more technical, bear with me while I give an oversimplified breakdown of robots and the robots.txt file. I want our readers to walk before they run!

There are all kinds of robots online, some good and some not so good. This may sound a little like Terminator (good vs. evil bots), and it is, just without the hostile takeover of humanity (yet). Every search engine uses robots to crawl websites; that’s how search engines like Google or Bing collect the content they return in search results. Without these robots, they wouldn’t have content to deliver to a searcher. Then there are “bad” robots that crawl sites for more nefarious purposes, or that simply crawl without any regard for crawl rates. This disrespect can quickly eat up your bandwidth if not managed well, but it is usually not a major problem for smaller sites.
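To make “should follow instructions” concrete, here’s a minimal sketch of what a well-behaved robot does before each request, using Python’s standard `urllib.robotparser`. The bot name `ExampleBot`, the site, and the one-second fallback pause are all hypothetical. `Crawl-delay` is a non-standard line that some engines (Bing, for one) honor and Google ignores; the sketch respects it when present.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: slow down, and stay out of the shopping cart.
ROBOTS_TXT = """\
User-agent: *
Crawl-delay: 5
Disallow: /cart/
"""

AGENT = "ExampleBot"   # hypothetical crawler name
DEFAULT_DELAY = 1.0    # hypothetical pause when the file sets no Crawl-delay


def plan_crawl(parser, urls):
    """Keep only the URLs this bot may fetch, paired with the pause to take after each."""
    delay = parser.crawl_delay(AGENT) or DEFAULT_DELAY
    return [(url, delay) for url in urls if parser.can_fetch(AGENT, url)]


parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

candidates = [
    "https://www.example.com/",
    "https://www.example.com/cart/checkout",
    "https://www.example.com/blog/",
]
for url, wait in plan_crawl(parser, candidates):
    # A real crawler would download the page here, then call time.sleep(wait).
    print(f"fetch {url}, then pause {wait}s")
```

A “bad” robot is simply one that skips both checks.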

Google’s most famous robot is called Googlebot, and Google’s own documentation covers it in more detail.

Bing’s robot is called… wait for it… bingbot. Duane Forrester gives us the skinny on bingbot in a video (in Silverlight, because they’re Microsoft!).

There’s a lot to know about robots.txt files, so I don’t want to overload your brain too much. Let’s just cover the most common robots.txt commands. Now you’ll be able to both read and eventually write your own files without having to bribe the IT team.

How to Use Your Robots.txt

User-agent:

This specifies the type of robot that you want to command. Your robots.txt file will start with this command 99% of the time (the only exception is when you see a comment or a Sitemap: line listed above it). Not sure which robot you want to give instructions to? That’s ok. Leave it set to “all” as the default. You do this by writing the following, where “*” means “all robots”:

User-agent: *
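If you do want to speak to one robot by name, you give it its own group. A robot follows the group that names it and falls back to the `*` group only when no group matches. Here’s a sketch checked with Python’s standard `urllib.robotparser`; the file is hypothetical. In it, bingbot is shut out entirely while every other robot only skips /private/.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical file: one group for bingbot, one catch-all group for everyone else.
ROBOTS_TXT = """\
User-agent: bingbot
Disallow: /

User-agent: *
Disallow: /private/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for agent in ("bingbot", "Googlebot"):
    for path in ("/blog/", "/private/notes.html"):
        allowed = parser.can_fetch(agent, "https://www.example.com" + path)
        print(f"{agent:<10} {path:<22} {'allowed' if allowed else 'blocked'}")
```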

Allow:

This is exactly what it sounds like. You’re telling robots that you want to allow certain content. You do NOT need to tell robots every page or folder of your site that you want to allow. By default, robots will freely crawl your site. The only instance when you might want to use this is if you’re worried they might not otherwise access a particular page or folder because of another command. For example, if you want to disallow a certain folder, you might want to tell the robots that you still want them to be able to access a certain page in that folder.
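Here’s a minimal sketch of that folder-plus-exception case, again using Python’s `urllib.robotparser` on a hypothetical file. One detail worth knowing: Google picks the most specific (longest) matching rule, so line order doesn’t matter to it, but Python’s parser takes the first matching rule in file order. Listing the Allow line first keeps both interpretations in agreement.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: block the /archive/ folder except for one page inside it.
ROBOTS_TXT = """\
User-agent: *
Allow: /archive/best-of.html
Disallow: /archive/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

site = "https://www.example.com"
print(parser.can_fetch("*", site + "/archive/best-of.html"))  # True: the Allow exception
print(parser.can_fetch("*", site + "/archive/2009.html"))     # False: rest of the folder
print(parser.can_fetch("*", site + "/about/"))                # True: no rule matches
```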

Disallow:

The disallow does the opposite of allow. You’re telling robots that you don’t want them to access a certain page or folder. They may still learn that the URL exists (for example, from links on other sites), but compliant robots won’t crawl it. This is probably the most common command in a robots.txt file because it’s how we tell robots not to look at a junk folder or a set of URLs that shouldn’t exist but, for some technical reason, do today. Note: always try to correct the problem at the source rather than using the robots.txt as a band-aid.
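Disallow values are matched against the start of the URL path, which is why a trailing slash matters. The sketch below uses a hypothetical file and URLs, checked with Python’s `urllib.robotparser`; `blocked` is a made-up helper. It shows that `Disallow: /junk` also blocks an unrelated page that merely starts with the same letters, while `Disallow: /junk/` sticks to the folder.

```python
from urllib.robotparser import RobotFileParser

site = "https://www.example.com"


def blocked(rule, path):
    """Return True if a single Disallow rule keeps '*' robots away from path."""
    parser = RobotFileParser()
    parser.parse(["User-agent: *", f"Disallow: {rule}"])
    return not parser.can_fetch("*", site + path)


for rule in ("/junk", "/junk/"):
    for path in ("/junk/old.html", "/junkyard-tour.html"):
        print(f"Disallow: {rule:<7} {path:<20} blocked={blocked(rule, path)}")
```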

Noindex:

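The noindex tells robots that you want content removed from their search results. It’s important to use noindex in situations where you have old content that is returning an error message, or folders and areas of your site that you do not want to appear to searchers even though you may want to give your users access. Be aware that Noindex was never part of the official robots.txt standard, and Google stopped honoring it in robots.txt files in September 2019; to keep a page out of Google’s index today, use a noindex robots meta tag or an X-Robots-Tag HTTP header instead.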

For example, many web developers will build a development version of your new site or redesign on their own domain. If they don’t noindex the folder where your site appears, suddenly there is a duplicate version of your site appearing on their domain!

NO!!! Do not let this happen.

Make sure the folder where your dev site appears has been both disallowed and noindexed. That tells the search engines you want them neither to crawl the content nor to index it. Make sense?
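Not every robot understands Noindex, though. Python’s standard `urllib.robotparser`, for one, doesn’t recognize a Noindex field, so it silently skips that line. This sketch uses a hypothetical `/dev-redesign/` folder and a made-up `can_crawl` helper to show that only the Disallow line actually keeps a compliant crawler out.

```python
from urllib.robotparser import RobotFileParser

site = "https://www.example.com"
page = site + "/dev-redesign/index.html"  # hypothetical dev-site folder


def can_crawl(lines):
    """Parse robots.txt lines and ask whether '*' robots may fetch the dev page."""
    parser = RobotFileParser()
    parser.parse(lines)
    return parser.can_fetch("*", page)


# Noindex alone: the parser ignores the unknown field, so nothing is blocked.
print(can_crawl(["User-agent: *", "Noindex: /dev-redesign/"]))  # True

# Adding Disallow is what actually keeps compliant crawlers out.
print(can_crawl(["User-agent: *", "Disallow: /dev-redesign/", "Noindex: /dev-redesign/"]))  # False
```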

Sitemap:

This is a command that tells the search engines where to find your XML sitemap file. If you’ve submitted your sitemap through their webmaster tools, this command is less vital, but I always like to include it so the bots have no question about where to find my file.
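The Sitemap line takes the full URL of your sitemap and can sit anywhere in the file, since it doesn’t belong to any User-agent group. As a sketch, Python’s `urllib.robotparser` (Python 3.8 or later) collects these lines for you; the file and URLs below are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical file listing two sitemaps, one before and one after the rules.
ROBOTS_TXT = """\
Sitemap: https://www.example.com/sitemap.xml
User-agent: *
Disallow: /tmp/
Sitemap: https://www.example.com/blog/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# site_maps() returns the Sitemap URLs in file order, or None if there are none.
print(parser.site_maps())
# ['https://www.example.com/sitemap.xml', 'https://www.example.com/blog/sitemap.xml']
```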

Robots.txt warning:

Folder structure matters! Going back to the developer working on your redesign, let’s pretend they need to disallow and noindex your site, which is located two folders deep at: [theirdomain].com/portfolio/[yoursite]/

They cannot simply specify /[yoursite]/ as the folder to noindex and disallow. I see this happen often! Someone wants to remove a certain folder, so (using the example above), they’ll write the following:

Disallow: /[yoursite]/
Noindex: /[yoursite]/

Guess what? That isn’t good enough! What you told the robots to do is disallow and noindex a folder called /[yoursite]/ in the ROOT of the domain, but your site isn’t located there. That is absolutely not the same thing as /portfolio/[yoursite]/. To make sure you’re disallowing and noindexing the right folder, you would have to do one of the following:

Option 1:

Disallow: /portfolio/[yoursite]/
Noindex: /portfolio/[yoursite]/

Option 2:

Disallow: /*/[yoursite]/
Noindex: /*/[yoursite]/

Remember when we told the user-agent line that we wanted all robots to listen up? How did we do that? We used the asterisk (*). This is the same idea. The asterisk in Option 2 is a placeholder for any folder name, so robots will disallow and noindex any folder named [yoursite] that sits below at least one other folder. They will know your folder is there, but they won’t crawl or index it. Without stating the name of the first folder or using the asterisk as a catch-all, you aren’t giving the robots sufficient instructions to follow. (One caveat: wildcards in paths are an extension that Google and Bing support, not something every robot understands.)

It’d be pretty difficult to drive somewhere if step three were missing from a five-step set of directions, huh? Same thing!
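Python’s standard parser doesn’t interpret wildcards, so here’s a small, hypothetical matcher (`rule_matches` is not a library function) that follows the pattern rules Google documents and RFC 9309 later standardized: `*` matches any run of characters, a trailing `$` pins the end of the URL, and otherwise a rule only needs to match the start of the path. `client-site` stands in for [yoursite].

```python
import re


def rule_matches(rule: str, path: str) -> bool:
    """Return True if a robots.txt path rule matches the given URL path."""
    anchored = rule.endswith("$")  # '$' means "the URL must end here"
    if anchored:
        rule = rule[:-1]
    # Escape regex metacharacters, then let each '*' stand for any characters.
    pattern = ".*".join(re.escape(piece) for piece in rule.split("*"))
    if anchored:
        pattern += "$"
    # re.match only anchors at the start, which gives the prefix behavior.
    return re.match(pattern, path) is not None


dev_page = "/portfolio/client-site/index.html"
print(rule_matches("/client-site/", dev_page))            # False: looks only in the root
print(rule_matches("/portfolio/client-site/", dev_page))  # True: Option 1
print(rule_matches("/*/client-site/", dev_page))          # True: Option 2
print(rule_matches("/*/client-site/", "/portfolio/my-client-site/"))  # False: name must match exactly
print(rule_matches("/*.pdf$", "/files/report.pdf"))       # True: '$' anchors the end
```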

FINAL WARNING: Be careful. Always validate and double-check your robots.txt file before you upload or modify it. There are horror stories in the vault of SEO lore about sites disappearing from the search results, supposedly because of a penalty, when in reality someone had messed up a command in the robots.txt. This is a powerful little file. Nothing on your site is more powerful except the usually hidden .htaccess file (we’ll save that one for another time).
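One way to check your file before you upload it is a small regression test: list the pages that must stay crawlable and the ones that must stay blocked, then run every draft through a parser. This sketch uses Python’s `urllib.robotparser`; the helper `find_problems` and the URL lists are hypothetical, so swap in your own. It catches the classic horror story of a stray `Disallow: /`. Keep in mind that this parser doesn’t interpret `*` wildcards in paths, so a draft that relies on them needs a wildcard-aware checker.

```python
from urllib.robotparser import RobotFileParser

SITE = "https://www.example.com"
MUST_ALLOW = ["/", "/products/widget", "/blog/"]   # hypothetical key pages
MUST_BLOCK = ["/cart/", "/dev-redesign/"]          # hypothetical private areas


def find_problems(robots_txt, agent="Googlebot"):
    """Return a list of human-readable problems with a draft robots.txt."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    problems = []
    for path in MUST_ALLOW:
        if not parser.can_fetch(agent, SITE + path):
            problems.append(f"{path} is blocked but should be crawlable")
    for path in MUST_BLOCK:
        if parser.can_fetch(agent, SITE + path):
            problems.append(f"{path} is crawlable but should be blocked")
    return problems


good_draft = "User-agent: *\nDisallow: /cart/\nDisallow: /dev-redesign/\n"
bad_draft = "User-agent: *\nDisallow: /\n"  # one character too many

print(find_problems(good_draft))  # []
print(find_problems(bad_draft))
```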

We’ve only begun to scratch the surface of your robots.txt file, so if you’re loving this post, here’s even more to read from authorities far better versed in this than I am:

Robots.txt Resources

- http://www.robotstxt.org/

- http://sebastians-pamphlets.com/

- https://www.google.com/webmasters/tools/home?hl=en

Within Google Webmaster Central, click into a site and go to “Site Configuration,” then “Crawler Access.” There you can view blocked URLs, construct your robots.txt, or remove URLs for that particular domain. You can also test how Google is able to access your site with a few of its robots: Googlebot-Mobile (crawls pages for the mobile index), Googlebot-Image (crawls pages for the image index), Mediapartners-Google (crawls pages to determine AdSense content), and Adsbot-Google (crawls pages to measure AdWords landing page quality).
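You can approximate that per-robot check offline. This sketch asks Python’s `urllib.robotparser` how a hypothetical file treats the Google robots named above. Treat it as a rough check: the stdlib parser matches robot names loosely (a group applies if its name appears inside the robot’s name) and doesn’t model every quirk of Google’s own crawlers.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical file: keep the image crawler out of /photos/, everyone out of /checkout/.
ROBOTS_TXT = """\
User-agent: Googlebot-Image
Disallow: /photos/

User-agent: *
Disallow: /checkout/
"""

ROBOTS = ["Googlebot", "Googlebot-Mobile", "Googlebot-Image",
          "Mediapartners-Google", "Adsbot-Google"]

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

url = "https://www.example.com/photos/team.jpg"
for robot in ROBOTS:
    verdict = "allowed" if parser.can_fetch(robot, url) else "blocked"
    print(f"{robot:<22}{verdict}")
```

Notice that Googlebot-Image, having its own group, is also allowed into /checkout/ here: a robot follows only the most specific group that names it, not the `*` group as well.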

Robots.txt Generators

- http://tools.seobook.com/robots-txt/generator/

The robots.txt file creation tool works well, but you’re limited to the engines in its list, and there’s no way to download the file after creating it.

SEOBook also features a robots.txt analyzer (http://tools.seobook.com/robots-txt/analyzer/), but it doesn’t seem to work very well, nor are the error reports very clear.
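If a generator’s missing download option bothers you, a few lines of Python can build the file and save it locally. This is a hypothetical sketch: `build_robots_txt` and its dictionary layout are made up for illustration, and you should still validate the output before uploading it.

```python
from pathlib import Path


def build_robots_txt(groups, sitemaps=()):
    """Render robots.txt text from {user_agent: [(field, path), ...]} groups."""
    blocks = []
    for agent, rules in groups.items():
        lines = [f"User-agent: {agent}"]
        lines += [f"{field}: {path}" for field, path in rules]
        blocks.append("\n".join(lines))
    if sitemaps:
        # Sitemap lines don't belong to any User-agent group.
        blocks.append("\n".join(f"Sitemap: {url}" for url in sitemaps))
    # A blank line between groups keeps the file easy to read.
    return "\n\n".join(blocks) + "\n"


text = build_robots_txt(
    {"*": [("Allow", "/archive/best-of.html"), ("Disallow", "/archive/")]},
    sitemaps=["https://www.example.com/sitemap.xml"],
)

if __name__ == "__main__":
    # The "download" step: write the file into the current working directory.
    Path("robots.txt").write_text(text, encoding="utf-8")
    print(text)
```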

Have any questions? Let’s chat below in the comments. And, make it an awesome Monday!