The robots.txt file

The robots.txt file gives search crawlers such as Googlebot instructions for interacting with your site. When you move your site to HTTPS, how Googlebot interacts with your site matters a great deal.

What you need to know about your robots.txt file when moving to HTTPS

When you are planning an HTTPS switch, it is important to know four specific things about your robots.txt file:

- If it exists

- What it says

- If it mentions a sitemap

- If it is blocking page resources

1. If it exists

Not every site has a robots.txt file, but for sites that use one it is always located in the same place: at the root of the domain, at /robots.txt (for example, https://example.com/robots.txt).

To see if you have a robots.txt file, replace the domain in that example with your own. If you have a robots.txt file, you will see it at that URL.
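As a rough illustration, the check can also be scripted. The sketch below builds the robots.txt address for any page on a site and tests whether the file is served. The function names `robots_url` and `has_robots_txt` are made up for this example, and defaulting to `https://` when no scheme is given is an assumption.

```python
from urllib.parse import urlsplit
from urllib.request import urlopen


def robots_url(site):
    """Return the robots.txt URL for the host in `site`."""
    # Assumption: a bare domain such as "example.com" is treated as HTTPS.
    if "://" not in site:
        site = "https://" + site
    parts = urlsplit(site)
    # robots.txt always lives at the root of the host, whatever the page path.
    return f"{parts.scheme}://{parts.netloc}/robots.txt"


def has_robots_txt(site, timeout=10):
    """Return True if the site answers 200 for its robots.txt URL."""
    try:
        with urlopen(robots_url(site), timeout=timeout) as response:
            return response.status == 200
    except OSError:
        # Covers HTTP errors such as 404 as well as network failures.
        return False
```

Calling `has_robots_txt("example.com")` makes a real network request, so run it against your own domain, once for the HTTP address and once for the HTTPS address.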

2. What it says

Many robots.txt files are very simple. A typical file that allows full access will look like this:

User-agent: *

Disallow:

Some robots.txt files are complicated and long:

Disallow: /wp-content/uploads/2014/

Disallow: /wp-content/uploads/2015/

Disallow: /wp-login.php

Disallow: /wp-admin/

Disallow: /send-to/

Disallow: /page/

Disallow: /2015/

Disallow: /2016/

Disallow: /2017/

Disallow: /*?*

Noindex: /wp-login.php

Noindex: /wp-admin/

Noindex: /send-to/

Noindex: /page/

Noindex: /2015/

Noindex: /2016/

Noindex: /2017/

Noindex: /*?*

Noindex: /ml-thanks/

Noindex: /downloads/

Noindex: /robots.txt

Whether your robots.txt file is simple or complicated, make sure you understand what it is doing. If you do not, seek guidance, because the contents of your robots.txt file can greatly affect the success of your HTTPS migration.
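One way to get an overview of a long file is to group its lines by directive. The following is a minimal sketch, not a full robots.txt parser: `summarize_robots` is a hypothetical helper, and it ignores which user agent each rule belongs to.

```python
from collections import defaultdict


def summarize_robots(robots_text):
    """Group the values in a robots.txt file by directive name."""
    summary = defaultdict(list)
    for raw_line in robots_text.splitlines():
        # Drop comments and surrounding whitespace.
        line = raw_line.split("#", 1)[0].strip()
        if not line or ":" not in line:
            continue
        field, value = line.split(":", 1)
        # Directive names are compared case-insensitively.
        summary[field.strip().lower()].append(value.strip())
    return dict(summary)


sample = """User-agent: *
Disallow: /wp-admin/
Disallow: /page/
Noindex: /page/
"""

for directive, values in summarize_robots(sample).items():
    print(directive, len(values), values)
```

A summary like this makes it easier to spot how many paths are disallowed and which directives the file relies on before you copy it to the HTTPS site.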

3. If it mentions a sitemap

When a robots.txt file points to a sitemap it looks like this:

Disallow: /wp-content/uploads/2009/

Disallow: /wp-content/uploads/2010/

Sitemap: https://example.com/sitemap.xml

The last line of the example above starts with “Sitemap:” followed by a URL. This is how robots.txt files point to sitemaps. If your file has a line like this, it points to a sitemap.
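If you would rather check this with a script than by eye, a small sketch like the one below pulls out every sitemap line. The helper name `sitemap_urls` is an assumption for this example; the sample text mirrors the excerpt shown in this section.

```python
def sitemap_urls(robots_text):
    """Return the URLs from every "Sitemap:" line in a robots.txt file."""
    urls = []
    for line in robots_text.splitlines():
        # Split on the first colon only, so the colon in "https://" is kept.
        field, separator, value = line.partition(":")
        if separator and field.strip().lower() == "sitemap":
            urls.append(value.strip())
    return urls


example = """Disallow: /wp-content/uploads/2009/
Disallow: /wp-content/uploads/2010/
Sitemap: https://example.com/sitemap.xml
"""

print(sitemap_urls(example))
```

An empty list means the file does not point to a sitemap.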

4. If it is blocking page resources

If your robots.txt file is blocking page resources such as CSS or JavaScript files, it may be negatively affecting your rankings. The Google webmaster guidelines state that you should not block page resources.

Blocked resources can affect the success of an HTTPS switch.
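A quick way to test for blocked resources is to run a list of your CSS and JavaScript URLs through a robots.txt parser. This sketch uses Python's standard `urllib.robotparser`, which matches rules by simple path prefix and does not implement Googlebot's wildcard handling, so treat the result as an approximation. The function name `blocked_urls` and the sample URLs are assumptions.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_text, urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt rules disallow."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


rules = """User-agent: *
Disallow: /wp-content/
"""

resources = [
    "https://example.com/wp-content/themes/site.css",
    "https://example.com/js/app.js",
    "https://example.com/about/",
]

for url in blocked_urls(rules, resources):
    print("blocked:", url)
```

Any stylesheet or script that shows up in the output is worth unblocking before the move.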

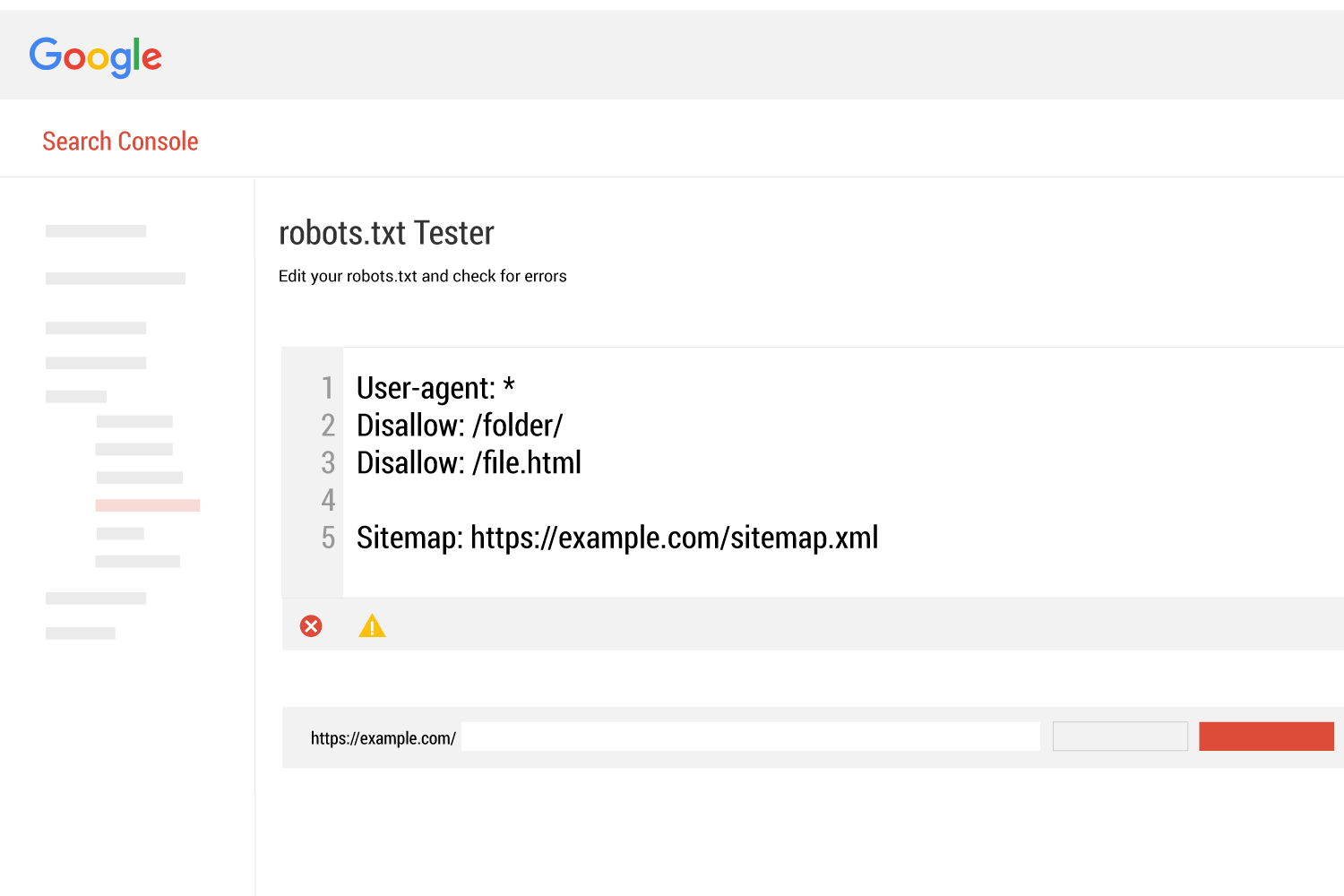

Checking your robots.txt status in Google Search Console

You can get a great overview of your robots.txt file status in Google Search Console.

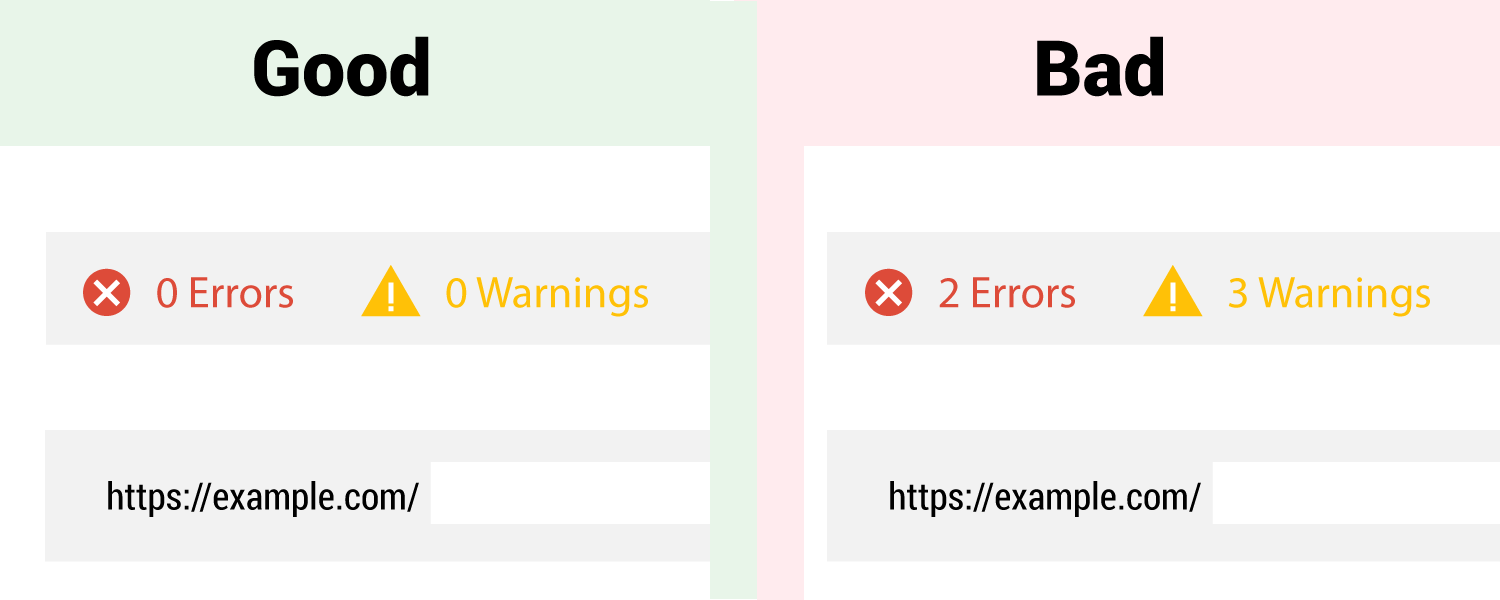

The robots.txt tester page displays your robots.txt file instructions and notes any errors or warnings.

If you have any errors or warnings, address them before your HTTPS migration.

Prepping your HTTPS robots.txt file

Prior to switching to HTTPS you should have a robots.txt file ready to go.

The only difference between your HTTP and HTTPS robots.txt files at this point should be the sitemap link.

Your HTTPS robots.txt should point to your HTTPS sitemap (the HTTPS sitemap should only include HTTPS URLs).

We recommend separate robots.txt files for HTTP and HTTPS, pointing to separate sitemap files for HTTP and HTTPS. We also recommend listing a specific URL in only one sitemap file.
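Since the sitemap link is the only line that should differ, the HTTPS file can be derived from the HTTP one. The sketch below does this under one assumption that you should verify for your own site: the HTTPS sitemap lives at the same host and path as the HTTP one. `https_robots` is a hypothetical helper name.

```python
def https_robots(http_robots_text):
    """Copy a robots.txt file, switching Sitemap links from HTTP to HTTPS."""
    output = []
    for line in http_robots_text.splitlines():
        field, separator, value = line.partition(":")
        if separator and field.strip().lower() == "sitemap":
            url = value.strip()
            # Assumption: the HTTPS sitemap uses the same host and path.
            if url.startswith("http://"):
                url = "https://" + url[len("http://"):]
            line = f"Sitemap: {url}"
        # Every other directive is carried over unchanged.
        output.append(line)
    return "\n".join(output) + "\n"


http_version = """User-agent: *
Disallow: /wp-admin/
Sitemap: http://example.com/sitemap.xml
"""

print(https_robots(http_version))
```

The sitemap file itself still needs to be regenerated so that it lists only HTTPS URLs; this only updates the pointer to it.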

The ideal robots.txt file for an HTTPS switch

The truth is, an empty robots.txt file, or one that explicitly allows all user agents to crawl everything, is ideal for an HTTPS migration.

This is not always possible, and most people think that would be crazy. Let’s look at what Google recommends for an HTTPS migration:

On the source site, remove all robots.txt directives. This allows Googlebot to discover all redirects to the new site and update our index.

On the destination site, make sure the robots.txt file allows all crawling. This includes crawling of images, CSS, JavaScript, and other page assets, apart from the URLs you are certain you do not want crawled.

In the Google help documentation, an HTTP to HTTPS move is considered a “site move with URL changes”. This means the HTTP to HTTPS migration steps are somewhat “lumped” into the same documentation that covers other site moves (such as a change of domain name). This sometimes causes confusion.

Take the time to know your robots.txt instructions

Many websites take advantage of the robots.txt file and have complex, carefully planned robot instructions. If we followed the Google help documentation to the letter, we would lose all of that structure, and that could cause havoc.

The best bet is to know what your robot instructions are stating and why those instructions are there.

If you are confident that your robots.txt is sound and is not negatively impacting your ranking or indexation, then you are fine keeping it the way it is for your HTTPS switch. This is what most people do.

As stated above, the only real change between your HTTP robots.txt and your HTTPS robots.txt would be the sitemap link.

Bottom Line

It is essential that you are familiar with your robots.txt and confident that what it states is what you want.

During an HTTPS switch, Googlebot will be crawling and recrawling all of your URLs and files. The robots.txt file provides instructions to Googlebot and is therefore critical to how your HTTPS migration will turn out.

If you find errors, warnings, or blocked content, it is likely worth your time to fix them before your HTTPS move. Doing so presents a cleaner version of your site for indexing as HTTPS.
